Pitfalls

The containers must communicate over Docker host network mode.

Directory layout

├── docker-compose.yml
├── fastdfs.tar.gz
├── nginx
│   └── nginx.conf
├── storage
│   ├── conf
│   │   └── storage.conf
│   └── data
├── store_path
│   └── data
└── tracker
    ├── conf
    │   ├── client.conf
    │   └── tracker.conf
    └── data

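Notes

Set TRACKER_SERVER to the IP address of the VM or the public server.

Host network mode is required here.

If the host is a VM, open the firewall ports manually: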

sudo firewall-cmd --zone=public --add-port=22122/tcp --permanent

sudo firewall-cmd --zone=public --add-port=23000/tcp --permanent

sudo firewall-cmd --zone=public --add-port=9800/tcp --permanent

sudo firewall-cmd --reload
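After reloading the firewall it can help to confirm that the three ports really accept connections from the client machine. The following is a minimal sketch using only the JDK; the class name `FastDfsPortCheck` and the 2-second timeout are illustrative choices, and the default IP is simply the one used throughout these notes.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

/** Checks whether the FastDFS ports opened above accept TCP connections. */
class FastDfsPortCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false; // refused, blocked by the firewall, or timed out
        }
    }

    public static void main(String[] args) {
        // Assumption: the VM/server IP is passed as the first argument;
        // otherwise the IP from these notes is used.
        String host = args.length > 0 ? args[0] : "192.168.106.132";
        int[] ports = {22122, 23000, 9800}; // tracker, storage, HTTP access port
        for (int port : ports) {
            System.out.println(host + ":" + port + " reachable = " + isReachable(host, port, 2000));
        }
    }
}
```

A `false` result only says the TCP connection failed; it does not distinguish a closed firewall port from a container that is not running.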

docker-compose.yml

version: "3.5"
services:
  fastdfs-tracker:
    hostname: fastdfs-tracker
    container_name: fastdfs-tracker
    image: season/fastdfs:1.2
    network_mode: "host"
    command: tracker
    volumes:
      - ./tracker/data:/fastdfs/tracker/data
      - ./tracker/conf:/etc/fdfs
  fastdfs-storage:
    hostname: fastdfs-storage
    container_name: fastdfs-storage
    image: season/fastdfs:1.2
    network_mode: "host"
    volumes:
      - ./storage/data:/fastdfs/storage/data
      - ./store_path:/fastdfs/store_path
      - ./storage/conf/storage.conf:/fdfs_conf/storage.conf
    environment:
      - TRACKER_SERVER=192.168.106.132:22122
    command: storage
    depends_on:
      - fastdfs-tracker
  fastdfs-nginx:
    hostname: fastdfs-nginx
    container_name: fastdfs-nginx
    image: season/fastdfs:1.2
    network_mode: "host"
    volumes:
      - ./nginx/nginx.conf:/etc/nginx/conf/nginx.conf
      - ./store_path:/fastdfs/store_path
    environment:
      - TRACKER_SERVER=192.168.106.132:22122
    command: nginx

tracker.conf

# is this config file disabled

# false for enabled

# true for disabled

disabled=false

# bind an address of this host

# empty for bind all addresses of this host

bind_addr=

# the tracker server port

port=22122

# connect timeout in seconds

# default value is 30s

connect_timeout=30

# network timeout in seconds

# default value is 30s

network_timeout=60

# the base path to store data and log files

base_path=/fastdfs/tracker

# max concurrent connections this server supported

max_connections=256

# accept thread count

# default value is 1

# since V4.07

accept_threads=1

# work thread count, should <= max_connections

# default value is 4

# since V2.00

work_threads=4

# the method of selecting group to upload files

# 0: round robin

# 1: specify group

# 2: load balance, select the max free space group to upload file

store_lookup=2

# which group to upload file

# when store_lookup set to 1, must set store_group to the group name

store_group=group1

# which storage server to upload file

# 0: round robin (default)

# 1: the first server order by ip address

# 2: the first server order by priority (the minimal)

store_server=0

# which path(means disk or mount point) of the storage server to upload file

# 0: round robin

# 2: load balance, select the max free space path to upload file

store_path=0

# which storage server to download file

# 0: round robin (default)

# 1: the source storage server which the current file uploaded to

download_server=0

# reserved storage space for system or other applications.

# if the free(available) space of any storage server in

# a group <= reserved_storage_space,

# no file can be uploaded to this group.

# bytes unit can be one of follows:

### G or g for gigabyte(GB)

### M or m for megabyte(MB)

### K or k for kilobyte(KB)

### no unit for byte(B)

### XX.XX% as ratio such as reserved_storage_space = 10%

reserved_storage_space = 10%

#standard log level as syslog, case insensitive, value list:

### emerg for emergency

### alert

### crit for critical

### error

### warn for warning

### notice

### info

### debug

log_level=info

#unix group name to run this program,

#not set (empty) means run by the group of current user

run_by_group=

#unix username to run this program,

#not set (empty) means run by current user

run_by_user=

# allow_hosts can occur more than once, host can be hostname or ip address,

# "*" means match all ip addresses, can use range like this: 10.0.1.[1-15,20] or

# host[01-08,20-25].domain.com, for example:

# allow_hosts=10.0.1.[1-15,20]

# allow_hosts=host[01-08,20-25].domain.com

allow_hosts=*

# sync log buff to disk every interval seconds

# default value is 10 seconds

sync_log_buff_interval = 10

# check storage server alive interval seconds

check_active_interval = 120

# thread stack size, should >= 64KB

# default value is 64KB

thread_stack_size = 64KB

# auto adjust when the ip address of the storage server changed

# default value is true

storage_ip_changed_auto_adjust = true

# storage sync file max delay seconds

# default value is 86400 seconds (one day)

# since V2.00

storage_sync_file_max_delay = 86400

# the max time of storage sync a file

# default value is 300 seconds

# since V2.00

storage_sync_file_max_time = 300

# if use a trunk file to store several small files

# default value is false

# since V3.00

use_trunk_file = false

# the min slot size, should <= 4KB

# default value is 256 bytes

# since V3.00

slot_min_size = 256

# the max slot size, should > slot_min_size

# store the upload file to trunk file when its size <= this value

# default value is 16MB

# since V3.00

slot_max_size = 16MB

# the trunk file size, should >= 4MB

# default value is 64MB

# since V3.00

trunk_file_size = 64MB

# if create trunk file advancely

# default value is false

# since V3.06

trunk_create_file_advance = false

# the time base to create trunk file

# the time format: HH:MM

# default value is 02:00

# since V3.06

trunk_create_file_time_base = 02:00

# the interval of create trunk file, unit: second

# default value is 86400 (one day)

# since V3.06

trunk_create_file_interval = 86400

# the threshold to create trunk file

# when the free trunk file size less than the threshold, will create

# the trunk files

# default value is 0

# since V3.06

trunk_create_file_space_threshold = 20G

# if check trunk space occupying when loading trunk free spaces

# the occupied spaces will be ignored

# default value is false

# since V3.09

# NOTICE: set this parameter to true will slow the loading of trunk spaces

# at startup. you should set this parameter to true only when necessary.

trunk_init_check_occupying = false

# if ignore storage_trunk.dat, reload from trunk binlog

# default value is false

# since V3.10

# set to true once for version upgrade when your version less than V3.10

trunk_init_reload_from_binlog = false

# if use storage ID instead of IP address

# default value is false

# since V4.00

use_storage_id = false

# specify storage ids filename, can use relative or absolute path

# since V4.00

storage_ids_filename = storage_ids.conf

# id type of the storage server in the filename, values are:

## ip: the ip address of the storage server

## id: the server id of the storage server

# this parameter is valid only when use_storage_id set to true

# default value is ip

# since V4.03

id_type_in_filename = ip

# if store slave file use symbol link

# default value is false

# since V4.01

store_slave_file_use_link = false

# if rotate the error log every day

# default value is false

# since V4.02

rotate_error_log = false

# rotate error log time base, time format: Hour:Minute

# Hour from 0 to 23, Minute from 0 to 59

# default value is 00:00

# since V4.02

error_log_rotate_time=00:00

# rotate error log when the log file exceeds this size

# 0 means never rotates log file by log file size

# default value is 0

# since V4.02

rotate_error_log_size = 0

# if use connection pool

# default value is false

# since V4.05

use_connection_pool = false

# connections whose the idle time exceeds this time will be closed

# unit: second

# default value is 3600

# since V4.05

connection_pool_max_idle_time = 3600

# HTTP port on this tracker server

http.server_port=8080

# check storage HTTP server alive interval seconds

# <= 0 for never check

# default value is 30

http.check_alive_interval=30

# check storage HTTP server alive type, values are:

# tcp : connect to the storage server with HTTP port only,

# do not request and get response

# http: storage check alive url must return http status 200

# default value is tcp

http.check_alive_type=tcp

# check storage HTTP server alive uri/url

# NOTE: storage embed HTTP server support uri: /status.html

http.check_alive_uri=/status.html
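Several values in tracker.conf carry size suffixes (64KB, 16MB, 20G), and the comments state constraints between them, such as slot_min_size <= 4KB and trunk_file_size >= 4MB. The sketch below converts such values to bytes so the constraints can be checked before starting the container. It is an illustration based on the comments in the file, assuming 1024-based units and an optional trailing B; it is not FastDFS's own parser, and the class name `FdfsSize` is hypothetical.

```java
import java.util.Locale;

/** Converts size values in the style used by tracker.conf to bytes. */
class FdfsSize {

    /** Parses values such as "256", "64KB", "16MB" or "20G" (assumed 1024-based). */
    static long parseBytes(String value) {
        String v = value.trim().toUpperCase(Locale.ROOT);
        if (v.endsWith("B")) {
            v = v.substring(0, v.length() - 1); // "64KB" -> "64K"
        }
        long multiplier = 1L;
        if (v.endsWith("G")) {
            multiplier = 1024L * 1024 * 1024;
        } else if (v.endsWith("M")) {
            multiplier = 1024L * 1024;
        } else if (v.endsWith("K")) {
            multiplier = 1024L;
        }
        if (multiplier != 1L) {
            v = v.substring(0, v.length() - 1); // drop the unit letter
        }
        return Long.parseLong(v.trim()) * multiplier;
    }

    public static void main(String[] args) {
        // Values copied from the tracker.conf above
        long slotMin = parseBytes("256");
        long slotMax = parseBytes("16MB");
        long trunkFile = parseBytes("64MB");
        long threadStack = parseBytes("64KB");

        System.out.println("slot_min_size <= 4KB: " + (slotMin <= parseBytes("4KB")));
        System.out.println("slot_max_size > slot_min_size: " + (slotMax > slotMin));
        System.out.println("trunk_file_size >= 4MB: " + (trunkFile >= parseBytes("4MB")));
        System.out.println("thread_stack_size >= 64KB: " + (threadStack >= parseBytes("64KB")));
    }
}
```

Percentage values such as reserved_storage_space = 10% are deliberately not handled here and would raise a NumberFormatException.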

client.conf (change the IP here to that of your VM or server)

# connect timeout in seconds

# default value is 30s

connect_timeout=30

# network timeout in seconds

# default value is 30s

network_timeout=60

# the base path to store log files

base_path=/fastdfs/client

# tracker_server can occur more than once, and tracker_server format is

# "host:port", host can be hostname or ip address

# change the IP here

tracker_server=192.168.106.132:22122

#standard log level as syslog, case insensitive, value list:

### emerg for emergency

### alert

### crit for critical

### error

### warn for warning

### notice

### info

### debug

log_level=info

# if use connection pool

# default value is false

# since V4.05

use_connection_pool = false

# connections whose the idle time exceeds this time will be closed

# unit: second

# default value is 3600

# since V4.05

connection_pool_max_idle_time = 3600

# if load FastDFS parameters from tracker server

# since V4.05

# default value is false

load_fdfs_parameters_from_tracker=false

# if use storage ID instead of IP address

# same as tracker.conf

# valid only when load_fdfs_parameters_from_tracker is false

# default value is false

# since V4.05

use_storage_id = false

# specify storage ids filename, can use relative or absolute path

# same as tracker.conf

# valid only when load_fdfs_parameters_from_tracker is false

# since V4.05

storage_ids_filename = storage_ids.conf

#HTTP settings

http.tracker_server_port=80

#use "#include" directive to include other HTTP settings

##include http.conf

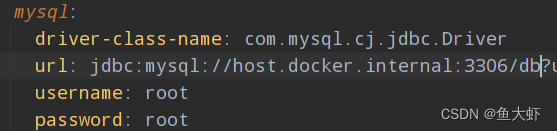

storage.conf (change the IP here to that of your VM or server)

# is this config file disabled

# false for enabled

# true for disabled

disabled=false

# the name of the group this storage server belongs to

group_name=group1

# bind an address of this host

# empty for bind all addresses of this host

bind_addr=

# if bind an address of this host when connect to other servers

# (this storage server as a client)

# true for binding the address configured by above parameter: "bind_addr"

# false for binding any address of this host

client_bind=true

# the storage server port

port=23000

# connect timeout in seconds

# default value is 30s

connect_timeout=30

# network timeout in seconds

# default value is 30s

network_timeout=60

# heart beat interval in seconds

heart_beat_interval=30

# disk usage report interval in seconds

stat_report_interval=60

# the base path to store data and log files

base_path=/fastdfs/storage

# max concurrent connections the server supported

# default value is 256

# more max_connections means more memory will be used

max_connections=256

# the buff size to recv / send data

# this parameter must be more than 8KB

# default value is 64KB

# since V2.00

buff_size = 256KB

# accept thread count

# default value is 1

# since V4.07

accept_threads=1

# work thread count, should <= max_connections

# work thread deal network io

# default value is 4

# since V2.00

work_threads=4

# if disk read / write separated

## false for mixed read and write

## true for separated read and write

# default value is true

# since V2.00

disk_rw_separated = true

# disk reader thread count per store base path

# for mixed read / write, this parameter can be 0

# default value is 1

# since V2.00

disk_reader_threads = 1

# disk writer thread count per store base path

# for mixed read / write, this parameter can be 0

# default value is 1

# since V2.00

disk_writer_threads = 1

# when no entry to sync, try read binlog again after X milliseconds

# must > 0, default value is 200ms

sync_wait_msec=50

# after sync a file, usleep milliseconds

# 0 for sync successively (never call usleep)

sync_interval=0

# storage sync start time of a day, time format: Hour:Minute

# Hour from 0 to 23, Minute from 0 to 59

sync_start_time=00:00

# storage sync end time of a day, time format: Hour:Minute

# Hour from 0 to 23, Minute from 0 to 59

sync_end_time=23:59

# write to the mark file after sync N files

# default value is 500

write_mark_file_freq=500

# path(disk or mount point) count, default value is 1

store_path_count=1

# store_path#, based 0, if store_path0 does not exist, its value is base_path

# the paths must exist

store_path0=/fastdfs/store_path

#store_path1=/home/yuqing/fastdfs2

# subdir_count * subdir_count directories will be auto created under each

# store_path (disk), value can be 1 to 256, default value is 256

subdir_count_per_path=256

# tracker_server can occur more than once, and tracker_server format is

# "host:port", host can be hostname or ip address

tracker_server=192.168.106.132:22122

#standard log level as syslog, case insensitive, value list:

### emerg for emergency

### alert

### crit for critical

### error

### warn for warning

### notice

### info

### debug

log_level=info

#unix group name to run this program,

#not set (empty) means run by the group of current user

run_by_group=

#unix username to run this program,

#not set (empty) means run by current user

run_by_user=

# allow_hosts can occur more than once, host can be hostname or ip address,

# "*" means match all ip addresses, can use range like this: 10.0.1.[1-15,20] or

# host[01-08,20-25].domain.com, for example:

# allow_hosts=10.0.1.[1-15,20]

# allow_hosts=host[01-08,20-25].domain.com

allow_hosts=*

# the mode of the files distributed to the data path

# 0: round robin(default)

# 1: random, distributed by hash code

file_distribute_path_mode=0

# valid when file_distribute_path_mode is set to 0 (round robin),

# when the written file count reaches this number, then rotate to next path

# default value is 100

file_distribute_rotate_count=100

# call fsync to disk when write big file

# 0: never call fsync

# other: call fsync when written bytes >= this bytes

# default value is 0 (never call fsync)

fsync_after_written_bytes=0

# sync log buff to disk every interval seconds

# must > 0, default value is 10 seconds

sync_log_buff_interval=10

# sync binlog buff / cache to disk every interval seconds

# default value is 60 seconds

sync_binlog_buff_interval=10

# sync storage stat info to disk every interval seconds

# default value is 300 seconds

sync_stat_file_interval=300

# thread stack size, should >= 512KB

# default value is 512KB

thread_stack_size=512KB

# the priority as a source server for uploading file.

# the lower this value, the higher its uploading priority.

# default value is 10

upload_priority=10

# the NIC alias prefix, such as eth in Linux, you can see it by ifconfig -a

# multi aliases split by comma. empty value means auto set by OS type

# default value is empty

if_alias_prefix=

# if check file duplicate, when set to true, use FastDHT to store file indexes

# 1 or yes: need check

# 0 or no: do not check

# default value is 0

check_file_duplicate=0

# file signature method for check file duplicate

## hash: four 32 bits hash code

## md5: MD5 signature

# default value is hash

# since V4.01

file_signature_method=hash

# namespace for storing file indexes (key-value pairs)

# this item must be set when check_file_duplicate is true / on

key_namespace=FastDFS

# set keep_alive to 1 to enable persistent connection with FastDHT servers

# default value is 0 (short connection)

keep_alive=0

# you can use "#include filename" (not include double quotes) directive to

# load FastDHT server list, when the filename is a relative path such as

# pure filename, the base path is the base path of current/this config file.

# must set FastDHT server list when check_file_duplicate is true / on

# please see INSTALL of FastDHT for detail

##include /home/yuqing/fastdht/conf/fdht_servers.conf

# if log to access log

# default value is false

# since V4.00

use_access_log = false

# if rotate the access log every day

# default value is false

# since V4.00

rotate_access_log = false

# rotate access log time base, time format: Hour:Minute

# Hour from 0 to 23, Minute from 0 to 59

# default value is 00:00

# since V4.00

access_log_rotate_time=00:00

# if rotate the error log every day

# default value is false

# since V4.02

rotate_error_log = false

# rotate error log time base, time format: Hour:Minute

# Hour from 0 to 23, Minute from 0 to 59

# default value is 00:00

# since V4.02

error_log_rotate_time=00:00

# rotate access log when the log file exceeds this size

# 0 means never rotates log file by log file size

# default value is 0

# since V4.02

rotate_access_log_size = 0

# rotate error log when the log file exceeds this size

# 0 means never rotates log file by log file size

# default value is 0

# since V4.02

rotate_error_log_size = 0

# if skip the invalid record when sync file

# default value is false

# since V4.02

file_sync_skip_invalid_record=false

# if use connection pool

# default value is false

# since V4.05

use_connection_pool = false

# connections whose the idle time exceeds this time will be closed

# unit: second

# default value is 3600

# since V4.05

connection_pool_max_idle_time = 3600

# use the ip address of this storage server if domain_name is empty,

# else this domain name will occur in the url redirected by the tracker server

http.domain_name=

# the port of the web server on this storage server

http.server_port=8888
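The tracker address now appears in three places: TRACKER_SERVER in docker-compose.yml, and tracker_server in both client.conf and storage.conf. A mismatch between them is easy to miss. The following sketch pulls every tracker_server entry out of a conf file's text so it can be compared with the expected address. The class and method names are hypothetical, and the line-based parsing is a simplification that only understands `key=value` lines and `#` comments.

```java
import java.util.ArrayList;
import java.util.List;

/** Extracts tracker_server entries from FastDFS-style conf text. */
class TrackerServerCheck {

    /** Returns every tracker_server value in confText, skipping blank and comment lines. */
    static List<String> trackerServers(String confText) {
        List<String> servers = new ArrayList<>();
        for (String line : confText.split("\\R")) {
            String trimmed = line.trim();
            if (trimmed.isEmpty() || trimmed.startsWith("#")) {
                continue;
            }
            int eq = trimmed.indexOf('=');
            if (eq > 0 && trimmed.substring(0, eq).trim().equals("tracker_server")) {
                servers.add(trimmed.substring(eq + 1).trim());
            }
        }
        return servers;
    }

    public static void main(String[] args) {
        // Expected value: the TRACKER_SERVER used in docker-compose.yml above
        String expected = "192.168.106.132:22122";
        // Inline sample standing in for the contents of client.conf or storage.conf
        String conf = String.join("\n",
                "# tracker_server can occur more than once",
                "tracker_server=192.168.106.132:22122",
                "log_level=info");
        List<String> found = trackerServers(conf);
        System.out.println("tracker_server entries: " + found);
        System.out.println("matches TRACKER_SERVER: " + found.contains(expected));
    }
}
```

In practice the conf text could be read with `Files.readString` (Java 11+) from tracker/conf/client.conf and storage/conf/storage.conf.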

Java connection test

<!-- FastDFS client package -->
<dependency>
    <groupId>net.oschina.zcx7878</groupId>
    <artifactId>fastdfs-client-java</artifactId>
    <version>1.27.0.0</version>
</dependency>

import org.csource.common.NameValuePair;
import org.csource.fastdfs.*;
import org.springframework.core.io.ClassPathResource;
import top.imuster.file.provider.file.FastDFSFile;

import java.io.ByteArrayInputStream;
import java.io.InputStream;

/**
 * FastDFS file management utility:
 * file upload,
 * file deletion,
 * file download,
 * file info lookup,
 * Storage info lookup,
 * Tracker info lookup.
 */

public class FastDFSUtil {

    /**
     * @Description: Load the Tracker connection settings
     * @Author: lpf
     * @Date: 2019/12/23 12:29
     **/
    static {
        try {
            // Path of the config file, relative to the classpath root
            String fileNameConf = new ClassPathResource("fdfs_client.conf").getPath();
            // Load the Tracker connection settings
            ClientGlobal.init(fileNameConf);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }

    /**
     * @Description: Upload a file
     * @param fastDFSFile : wrapper for the file being uploaded
     **/
    public static String[] upload(FastDFSFile fastDFSFile) throws Exception {
        // Extra metadata
        NameValuePair[] meta_list = new NameValuePair[1];
        meta_list[0] = new NameValuePair("author", fastDFSFile.getAuthor());
        // Get the TrackerServer
        TrackerServer trackerServer = getTrackerServer();
        // Get the StorageClient
        StorageClient storageClient = getStorageClient(trackerServer);
        /*
         * Upload the file to Storage through the StorageClient and get back
         * where it was stored.
         * Arguments:
         * 1. the file content as a byte array
         * 2. the file extension
         * 3. extra metadata, e.g. shooting location: Beijing
         * Returned uploads[]:
         * uploads[0]: name of the Storage group the file was stored in, e.g. group1
         * uploads[1]: name of the file on Storage, e.g. M00/02/44/1.jpg
         */
        String[] uploads = storageClient.upload_file(fastDFSFile.getContent(), fastDFSFile.getExt(), meta_list);
        return uploads;
    }

    /**
     * @Description: Get file information
     * @param groupName : group name of the file, e.g. group1
     * @param remoteFileName : storage path of the file, e.g. M00/02/44/1.jpg
     * @return: the file information
     **/
    public static FileInfo getFile(String groupName, String remoteFileName) throws Exception {
        // Get the TrackerServer
        TrackerServer trackerServer = getTrackerServer();
        // Get the StorageClient
        StorageClient storageClient = getStorageClient(trackerServer);
        // Get the file information
        return storageClient.get_file_info(groupName, remoteFileName);
    }

    /**
     * @Description: Download a file
     * @param groupName : group name of the file, e.g. group1
     * @param remoteFileName : storage path of the file, e.g. M00/02/44/1.jpg
     * @return: an InputStream over the downloaded bytes
     **/
    public static InputStream downloadFile(String groupName, String remoteFileName) throws Exception {
        // Get the TrackerServer
        TrackerServer trackerServer = getTrackerServer();
        // Get the StorageClient
        StorageClient storageClient = getStorageClient(trackerServer);
        // Download the file
        byte[] buffer = storageClient.download_file(groupName, remoteFileName);
        return new ByteArrayInputStream(buffer);
    }

    /**
     * @Description: Delete a file
     * @param groupName : group name of the file, e.g. group1
     * @param remoteFileName : storage path of the file, e.g. M00/02/44/1.jpg
     * @return: void
     **/
    public static void deleteFile(String groupName, String remoteFileName) throws Exception {
        // Get the TrackerServer
        TrackerServer trackerServer = getTrackerServer();
        // Get the StorageClient
        StorageClient storageClient = getStorageClient(trackerServer);
        // Delete the file
        storageClient.delete_file(groupName, remoteFileName);
    }

    /**
     * @Description: Get Storage information
     * @return: the Storage information
     **/
    public static StorageServer getStorage() throws Exception {
        // Create a TrackerClient, which is used to access the TrackerServer
        TrackerClient trackerClient = new TrackerClient();
        // Get the TrackerServer connection through the TrackerClient
        TrackerServer trackerServer = trackerClient.getConnection();
        // Get the Storage information
        return trackerClient.getStoreStorage(trackerServer);
    }

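    /**
     * @Description: Get the IP and port of the Storage servers holding a file
     * @param groupName : group name of the file, e.g. group1
     * @param remoteFileName : storage path of the file, e.g. M00/02/44/1.jpg
     * @return: the IP and port information of the Storage servers
     **/
    public static ServerInfo[] getServerInfo(String groupName, String remoteFileName) throws Exception {
        // Create a TrackerClient, which is used to access the TrackerServer
        TrackerClient trackerClient = new TrackerClient();
        // Get the TrackerServer connection through the TrackerClient
        TrackerServer trackerServer = trackerClient.getConnection();
        // Get the Storage IP and port information (the third argument is the file name, not the group name)
        return trackerClient.getFetchStorages(trackerServer, groupName, remoteFileName);
    }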

    /**
     * @Description: Get the Tracker information
     * @return: the Tracker HTTP URL
     **/
    public static String getTrackerInfo() throws Exception {
        // Get the TrackerServer
        TrackerServer trackerServer = getTrackerServer();
        // Tracker IP and HTTP port
        String ip = trackerServer.getInetSocketAddress().getHostString();
        int httpPort = ClientGlobal.getG_tracker_http_port();
        String url = "http://" + ip + ":" + httpPort;
        return url;
    }

    /**
     * @Description: Get the TrackerServer (shared helper to avoid duplicated code)
     * @return: org.csource.fastdfs.TrackerServer
     **/
    public static TrackerServer getTrackerServer() throws Exception {
        // Create a TrackerClient, which is used to access the TrackerServer
        TrackerClient trackerClient = new TrackerClient();
        // Get the TrackerServer connection through the TrackerClient
        TrackerServer trackerServer = trackerClient.getConnection();
        return trackerServer;
    }

    /**
     * @Description: Get the StorageClient
     * @param trackerServer
     * @return: org.csource.fastdfs.StorageClient
     **/
    public static StorageClient getStorageClient(TrackerServer trackerServer) throws Exception {
        StorageClient storageClient = new StorageClient(trackerServer, null);
        return storageClient;
    }

    // Tests
    public static void main(String[] args) throws Exception {
        /*FileInfo fileInfo = getFile("group1", "M00/00/00/rBgYGV4AkH-AafqxAAGGegCgxtc834.jpg");
        System.out.println(fileInfo.getSourceIpAddr());
        System.out.println(fileInfo.getFileSize());*/

        /* // File download
        InputStream is = downloadFile("group1", "M00/00/00/rBgYGV4Ag3aACWPGAAIsTF3RcOs943.jpg");
        // Write the file to the local disk
        FileOutputStream os = new FileOutputStream("D:\\tool\\images\\test_download\\1.jpg");
        // Copy through a buffer, writing only the bytes actually read
        byte[] buffer = new byte[1024];
        int len;
        while ((len = is.read(buffer)) != -1) {
            os.write(buffer, 0, len);
        }
        os.flush(); // flush the buffer
        os.close();
        is.close();*/

        // Test file deletion
        //deleteFile("group1", "M00/00/00/rBgYGV4AijSAD9iqAAIVMmsTSos650.jpg");

        // Get Storage information
        /*StorageServer storageServer = getStorage();
        System.out.println(storageServer.getStorePathIndex());
        System.out.println(storageServer.getInetSocketAddress().getHostString()); // IP*/

        // Get the IP and port of the Storage servers in the group
        /*ServerInfo[] groups = getServerInfo("group1", "M00/00/00/rBgYGV4AkH-AafqxAAGGegCgxtc834.jpg");
        System.out.println(groups);
        for (ServerInfo group : groups) {
            System.out.println(group.getIpAddr());
            System.out.println(group.getPort());
        }*/

        // Get the Tracker information
        //System.out.println(getTrackerInfo());
    }

}
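The download test in `main` copies the stream to disk by hand. As an alternative sketch, the JDK's `Files.copy` can write an `InputStream` to a file in one call. The class name `DownloadSaver` is hypothetical, and a `ByteArrayInputStream` stands in for the stream that `FastDFSUtil.downloadFile` would return, so the example runs without a FastDFS server.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

/** Saves a downloaded stream to a local file. */
class DownloadSaver {

    /** Writes the whole stream to target, replacing an existing file; returns the byte count. */
    static long save(InputStream in, Path target) throws IOException {
        try (InputStream source = in) { // closes the stream when done
            return Files.copy(source, target, StandardCopyOption.REPLACE_EXISTING);
        }
    }

    public static void main(String[] args) throws IOException {
        // Stand-in for FastDFSUtil.downloadFile(groupName, remoteFileName)
        InputStream in = new ByteArrayInputStream("demo".getBytes(StandardCharsets.UTF_8));
        Path target = Files.createTempFile("fastdfs-download-", ".bin");
        System.out.println("wrote " + save(in, target) + " bytes to " + target);
    }
}
```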

public static void main(String[] args) {
    try {
        // Wrap the file information
        File file = new File("D:\\1.png");
        FileInputStream inputStream = new FileInputStream(file);
        byte[] bytes = IoUtil.readBytes(inputStream);
        FastDFSFile fastDFSFile = new FastDFSFile(
                "1.png", // file name
                bytes, // file content as a byte array
                org.springframework.util.StringUtils.getFilenameExtension("1.png") // file extension
        );
        // Upload the file to FastDFS through the FastDFSUtil utility class
        String[] uploads = FastDFSUtil.upload(fastDFSFile);
        // Build the access URL, e.g. url = http://192.168.106.132:9800/group1/M00/00/00/CgAQCmYp93GARnrvAAAWcPkOFKA731.png
        //String url = "http://192.168.106.132:9800/" + uploads[0] + "/" + uploads[1];
        Message<Object> bySuccess = Message.createBySuccess(uploads[0] + "/" + uploads[1]);
        System.out.println(uploads[0] + "/" + uploads[1]);
    } catch (Exception e) {
        e.printStackTrace();
    }
}
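The upload test joins `uploads[0]` and `uploads[1]` by hand to form the file's access path. A small helper, sketched below, keeps that concatenation in one place and guards against a missing result. The class name `FastDfsUrl` is hypothetical, and the base URL is an assumption taken from the example address in the test's comment.

```java
/** Builds the HTTP access URL for an uploaded file. */
class FastDfsUrl {

    /** Joins the base URL with the {groupName, remoteFileName} pair returned by upload. */
    static String build(String baseUrl, String[] uploads) {
        if (uploads == null || uploads.length < 2) {
            throw new IllegalArgumentException("expected {groupName, remoteFileName}");
        }
        // Avoid a double slash when the base URL already ends with "/"
        String base = baseUrl.endsWith("/") ? baseUrl.substring(0, baseUrl.length() - 1) : baseUrl;
        return base + "/" + uploads[0] + "/" + uploads[1];
    }

    public static void main(String[] args) {
        // Sample values copied from the comment in the upload test
        String[] uploads = {"group1", "M00/00/00/CgAQCmYp93GARnrvAAAWcPkOFKA731.png"};
        System.out.println(build("http://192.168.106.132:9800/", uploads));
    }
}
```

The null check matters if the upload call returns no result on failure, in which case indexing `uploads[0]` directly would throw a NullPointerException.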